Teams around the world are building ever more sophisticated artificial intelligence systems of a type called neural networks, designed in some ways to mimic the wiring of the brain, for carrying out tasks such as computer vision and natural language processing.

Simulating neural networks on state-of-the-art semiconductor circuits requires large amounts of memory and consumes a great deal of power. Now, an MIT team has made strides toward an alternative system, which uses physical, analog devices that can mimic brain processes much more efficiently.

The findings are described in the journal Nature Communications, in a paper by MIT professors Bilge Yildiz, Ju Li, and Jesús del Alamo, and nine others at MIT and Brookhaven National Laboratory. The first author of the paper is Xiahui Yao, a former MIT postdoc now working on energy storage at GRU Energy Lab.

Neural networks attempt to simulate the way learning takes place in the brain, which is based on the gradual strengthening or weakening of the connections between neurons, known as synapses. The core component of this physical neural network is the resistive switch, whose electronic conductance can be controlled electrically. This control, or modulation, emulates the strengthening and weakening of synapses in the brain.

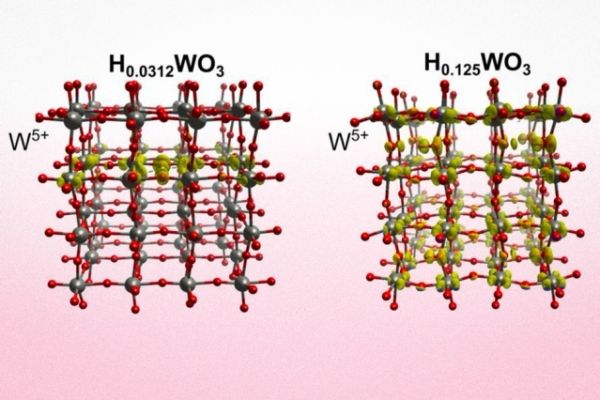

Image: A new system developed at MIT and Brookhaven National Lab could provide a faster, more reliable, and much more energy-efficient approach to physical neural networks, by using analog ionic-electronic devices to mimic synapses.

Courtesy of the researchers