Because turbulence is so complex, researchers have come to rely on a combination of experiments, semi-empirical turbulence models, and computer simulations to advance the field. Supercomputers have played an essential role in deepening researchers' understanding of turbulence physics, but even today's most computationally expensive approaches have limitations.

Recently, researchers at the Technical University of Darmstadt (TU Darmstadt), led by Prof. Dr. Martin Oberlack, and at the Universitat Politècnica de València, led by Prof. Dr. Sergio Hoyas, began using a new approach to understanding turbulence. With the help of supercomputing resources at the Leibniz Supercomputing Centre (LRZ), the team ran the largest turbulence simulation of its kind. Specifically, the team generated turbulence statistics from this large simulation of the Navier-Stokes equations, which provided the critical database underpinning a new theory of turbulence.

“Turbulence is statistical, because of the random behaviour we observe,” Oberlack said. “We believe the Navier-Stokes equations do a very good job of describing it, and with them we are able to study the entire range of scales down to the smallest ones, but that is also the problem: all of these scales play a role in turbulent motion, so we have to resolve all of them in simulations. The biggest problem is resolving the smallest turbulent scales, which decrease inversely with the Reynolds number (a dimensionless number that indicates how turbulent a flow is, defined as a flow's characteristic velocity times its length scale, divided by its kinematic viscosity). For airplanes like the Airbus A380, the Reynolds number is so large, and the smallest turbulent scales are therefore so small, that they cannot be represented even on SuperMUC-NG.”
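To give a feel for why high Reynolds numbers overwhelm even large machines, here is a rough, order-of-magnitude sketch. It assumes the classical Kolmogorov estimate for the smallest eddy size, η ≈ L·Re^(-3/4), which strictly applies to homogeneous turbulence rather than the wall-bounded flows studied here, so the numbers are illustrative only. The helper names and the flow cases in the loop are hypothetical and are not taken from the researchers' work.

```python
# Order-of-magnitude sketch. Assumption: classical Kolmogorov scaling,
# eta ~ L * Re**(-3/4); real wall-bounded flows have different resolution
# requirements, so treat these numbers as rough illustrations.

def reynolds_number(velocity: float, length: float, kinematic_viscosity: float) -> float:
    """Re = U * L / nu: ratio of inertial to viscous effects (dimensionless)."""
    return velocity * length / kinematic_viscosity


def kolmogorov_scale(length: float, reynolds: float) -> float:
    """Estimated size of the smallest turbulent eddies: eta ~ L * Re**(-3/4)."""
    return length * reynolds ** -0.75


def grid_points_3d(reynolds: float) -> float:
    """Grid points to resolve L down to eta in 3D: (L / eta)**3 ~ Re**(9/4)."""
    return reynolds ** 2.25


if __name__ == "__main__":
    nu_air = 1.5e-5  # m^2/s, approximate kinematic viscosity of air at room temperature
    # Hypothetical flow cases: (velocity in m/s, length scale in m)
    for u, l in [(1.0, 0.1), (10.0, 1.0), (250.0, 10.0)]:
        re_val = reynolds_number(u, l, nu_air)
        eta = kolmogorov_scale(l, re_val)
        print(f"U={u} m/s, L={l} m: Re={re_val:.2e}, "
              f"eta={eta:.2e} m, grid points ~{grid_points_3d(re_val):.1e}")
```

Because the required grid size grows roughly like Re^(9/4), a hundredfold increase in Reynolds number raises the point count by about five orders of magnitude, which is why the smallest scales of an airliner-scale flow remain out of reach for direct simulation.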

Read more at Gauss Centre for Supercomputing

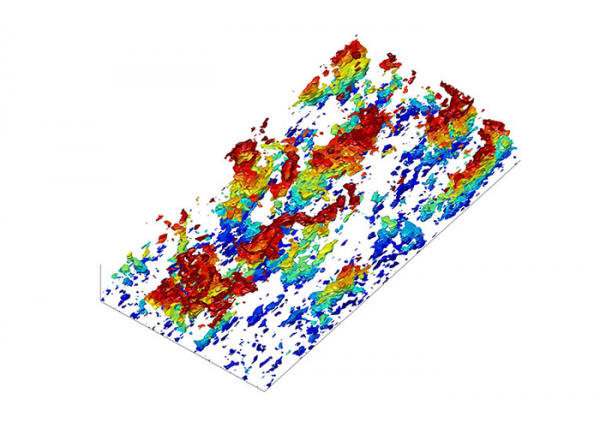

Image: Detail of the UV-structures in the logarithmic layer, coloured by their distance to the wall. (Credit: Sergio Hoyas)